Fabrizio Gotti’s NLP Projects

I have the pleasure of tackling exciting real-world challenges in NLP, surrounded by the best researchers in academia. Here are some of these projects.

Large language models (LLMs) to support Retraite Québec’s customer relationship management

2024

Principal developer: Fabrizio Gotti

In a

preliminary study, I explored the use of Large Language Models (LLMs) to

support Retraite Québec’s customer relationship management employees in

answering customer emails. The project involved implementing and comparing leading

LLMs: OpenAI Assistants with File Search, Google Vertex Agents, and Microsoft’s

Copilot. I also implemented bespoke solutions to investigate how to customize

LLMs to the task, using OpenAI and Llama 3 (on-premises). The goal was to enhance

the efficiency and accuracy of responses to customer inquiries by implementing

retrieval-augmented generation (RAG). By leveraging these advanced AI models,

the study aimed to improve customer service interactions and reduce response

times. The findings indicated potential benefits in using LLMs for this

purpose, paving the way for further research and development in this area.

Implementation:

Python scripts to implement retrieval-augmented generation of emails, notably in

order to implement an artificial “service script”. APIs from OpenAI, Google, Microsoft

(Copilot and Custom question answering). On-premises:

Llama 3, Weaviate’s Verba.
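To give an idea of what retrieval-augmented generation involves, here is a minimal sketch of the retrieval and prompt-assembly steps. It is not the project's code: the bag-of-words cosine ranking stands in for a real embedding search, the knowledge-base snippets are invented, and the names `retrieve`, `build_prompt` and `KNOWLEDGE_BASE` are hypothetical. The resulting prompt would then be sent to an LLM, a step omitted here.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, passages, k=2):
    """Return the k passages most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        passages,
        key=lambda p: cosine(q, Counter(tokenize(p))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(email, passages, k=2):
    """Assemble a prompt that grounds the reply in the retrieved passages."""
    context = "\n".join(f"- {p}" for p in retrieve(email, passages, k))
    return (
        "Answer the customer email using only the reference passages.\n"
        f"Reference passages:\n{context}\n\n"
        f"Customer email:\n{email}\n\nDraft reply:"
    )


# Hypothetical knowledge-base snippets, invented for this illustration.
KNOWLEDGE_BASE = [
    "Pension payments are deposited on the last working day of each month.",
    "To change your address, sign in to your online account and update your profile.",
    "A retirement pension application is submitted online or by mail.",
]

prompt = build_prompt("When will my pension payment be deposited?", KNOWLEDGE_BASE, k=1)
print(prompt)
```

Keeping retrieval separate from prompt assembly makes it easy to swap the toy ranking for a vector store while leaving the rest of the pipeline unchanged.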

Axel: Socially assistive

robot to alleviate social isolation among seniors through empathetic dialogue

2023–2024

Principal researchers: Jian-Yun Nie and François

Michaud (Université de Sherbrooke)

A project helmed by Fondation Sunny

Socially Assistive Robots are considered potential solutions to support

the independence and well-being of older adults. This project, initiated by the

not-for-profit organization Fondation Sunny, aims at creating

such a robot, capable of empathetic conversations with seniors. The robot’s

hardware and software were designed and tested by the Interdisciplinary Institute for

Technological Innovation (Université de Sherbrooke), and the RALI

laboratory was tasked with implementing empathetic conversations with large

language models, both on-premises (with Llama) and in the cloud (OpenAI). The

project also involved the Research Centre on Aging (RCA) of the Université de

Sherbrooke.

In the context of this

project, I mainly worked on fine-tuning and evaluating empathetic large language

models based on Meta’s Llama 2 and 3, and implemented an API to serve these models.

Photo credit: Interdisciplinary Institute for

Technological Innovation, Université de Sherbrooke.

Identification of New

Psychoactive Substances Illicitly Sold to Canadians

2023–2024

Principal researcher: David Décary-Hétu

The

aim of this project is to identify unknown new psychoactive substances (NPS)

that are offered for sale to Canadians. To achieve this goal, this project aims

to find discussions and advertisements online that are related to NPS, both on

the internet and on the dark web.

This

project involves four main activities: 1) the development of algorithms to

detect new psychoactive substances in online conversations; 2) the monitoring

of marketplaces; 3) the monitoring of discussion forums; and 4) the detection

of new psychoactive substances using machine learning algorithms.

CLIQ-ai: Consortium for

Computational Linguistics in Québec

Since 2020

Principal researcher: Jian-Yun Nie

Since 2020, my colleagues and I have been building CLIQ-ai, the

Computational Linguistics in Québec consortium, bringing together experts from

academia and industry in Quebec, whose collaboration advances natural language

processing technologies. An initiative of IVADO, this research group, which counts

10 academics and 13 industry partners, will ultimately contribute to the

advancement of NLP by investigating how knowledge

engineering and reasoning can be used in NLP models when data is scarce. We

will focus on three research axes: Information

and Knowledge Extraction (e.g. Open Information Extraction), knowledge modeling and reasoning,

and applications of this research, mainly in question answering and dialogue

systems (“chatbots”). I act as a scientific advisor in NLP and help write the

grant applications to fund this consortium.

Industrial Problem Solving

Workshop 2021: Environment & Climate Change Canada

“Development of a weather text generator”

August 2021, in collaboration with Environment Canada and the Centre de Recherches Mathématiques

A weather forecast can be expressed in many ways. However, the texts of official forecasts are highly structured and very constrained in their formulation: for a given set of concepts, there is only one “good” standard way of reporting the weather. In order to continue to provide quality weather forecast and information services to Canadians, the Meteorological Service of Canada (MSC) wishes to develop a weather forecast text generator, in English and French, which takes as input meteorological concepts that represent the weather forecast in coded form.

Our solution, developed

during the week of the workshop, employed a deep learning sequence-to-sequence

(seq2seq) model that translates meteorological data into text, focusing on a

subset of the problem: the text for the temperature forecast. We achieved a

very promising BLEU score of 76%. See our presentation here.
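For readers unfamiliar with BLEU, the sketch below shows how the metric is commonly computed: the geometric mean of clipped n-gram precisions, multiplied by a brevity penalty. This is a simplified, single-reference, sentence-level version without smoothing, not the evaluation script used at the workshop (BLEU is normally computed over a whole test set). The two forecast sentences are invented.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Single-reference, sentence-level BLEU without smoothing."""
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, one empty precision zeroes the score
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    bp = 1.0 if len(cand) >= len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)


# Invented example: the generated text gets one temperature wrong.
reference = "high near 25 except 20 along the coast"
candidate = "high near 25 except 18 along the coast"
print(round(bleu(candidate, reference), 3))
```

In this toy case a single wrong token out of eight brings the score down to about 0.5, which shows how strict the metric is on short texts.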

Website

for the 2021 edition of the workshop

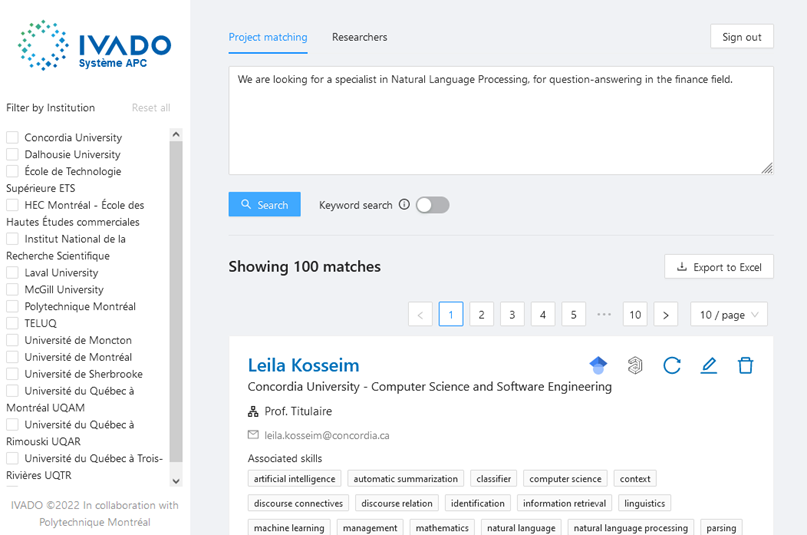

APC

System: NLP for Matching Researchers’ Interests with Industry Projects

2020-2022,

in collaboration with Polytechnique Montréal and IVADO

Leads:

Nancy Laramée, Lévis Thériault,

Lilia Jemai, Fabrizio Gotti

IVADO is a

Québec-wide collaborative institute in the field of digital intelligence,

dedicated to transforming new scientific discoveries into concrete applications

and benefits for all of society. As part of their mission, they match

researchers in artificial intelligence with industry projects and challenges.

With

IVADO’s Lilia Jemai, we led a team of fantastically talented Polytechnique

students to integrate NLP techniques in order to create a custom search engine

capable of matching the academics’ research interests with the project

descriptions. This “match-making” tool relied on bibliometric data retrieved

from Microsoft Academic (now defunct, replaced with OpenAlex) and Google Scholar, for all

researchers in Canada and elsewhere.

The chosen implementation,

in React and Python, leverages the spaCy NLP

framework and is deployed on IVADO’s Google Cloud Platform (Docker containers).

Here is a screenshot of the application.

Industrial Problem Solving

Workshop 2020: Air Canada

“Detection of recurring defects in civil aviation”

August 2020, in collaboration with Air Canada and the Centre de Recherches Mathématiques

Lead at Air Canada: Keith Dugas,

Manager, Connected Operations

Transport Canada mandates per the Canadian Aviation Regulation (CAR

706.05 and STD 726.05) that an Air Operator Certificate (AOC) holder must

include in its maintenance control system procedures for recording and

rectification of defects, including the identification of recurring defects.

Air Canada wished to detect recurring defects automatically, in a way that meets and

exceeds Transport Canada requirements for both MEL and non-MEL defects.

Extensive pre-processing

was necessary because of the complexity of the data, including the (partial)

resolution of the multiple acronyms. Text classification vastly outperformed

direct clustering. The task and the data deserved much more work than possible

during the workshop week, but the preliminary results are promising.
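As an illustration of the kind of pre-processing involved, here is a minimal sketch of dictionary-based abbreviation expansion applied to a defect log entry before classification. The abbreviation table and the sample entry are invented for this example; the actual resources and rules used during the workshop are not described here.

```python
import re

# Illustrative abbreviation table (an assumption, not the workshop's list).
ABBREVIATIONS = {
    "inop": "inoperative",
    "fwd": "forward",
    "lav": "lavatory",
    "eng": "engine",
}


def normalize(entry, abbreviations=ABBREVIATIONS):
    """Lowercase a log entry, drop punctuation and expand known abbreviations."""
    tokens = re.findall(r"[a-z0-9]+", entry.lower())
    return " ".join(abbreviations.get(token, token) for token in tokens)


print(normalize("FWD LAV light INOP."))
```

Tokens missing from the table are kept as-is, which corresponds to the partial resolution mentioned above.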

Website

for the 2020 edition of the workshop

CO.SHS:

Open Cyberinfrastructure for the Humanities and Social Sciences

2017-2020,

in collaboration with Érudit, the leading digital dissemination platform of

HSS research in Canada

Leads:

Philippe Langlais and Vincent Larivière

CO.SHS is dedicated to supporting research in the

humanities and social sciences and the arts and letters in multiple ways.

Financed by the Canada Foundation for Innovation as part of the Cyberinfrastructure initiative (read the funding announcement), the project is overseen by Vincent Larivière, associate professor of information

science at the École de bibliothéconomie et des sciences de l’information,

holder of the Canada Research Chair on the Transformations of Scholarly

Communication and scientific director of Érudit.

The Allium prototype,

developed by the RALI (Philippe Langlais and Fabrizio Gotti,

Université de Montréal), aims to increase information discoverability in the

digital library Érudit through open information extraction (OIE) and

end-to-end named entity recognition. It uses the semantics of the plain text of

journal articles to explore the deep syntactic dependencies between the words

of a sentence. The direct extraction of indexed facts enhances the user

experience and offers the possibility of browsing based on concepts, themes and

named entities in context. The implementation of external links pointing to

knowledge bases such as Wikipedia further enriches the browsing experience.

See the

prototype in action on YouTube.

Butterfly Predictive

Project: Big Data and Social Media for E-recruitment

2014-2017,

in collaboration with Little Big Job Networks Inc.

Lead:

Guy Lapalme

The RALI at Université de Montréal

and its industrial partner LittleBigJob Networks Inc. are developing a platform to

improve the process of recruiting managers and high-level technicians by

exploiting Big Data in several respects: improvement in harvesting and combining

public data from social networks; improvement in the matching process between

candidates and job offers; prediction of the success of a given candidate at a

new position. This project relies on the analysis of Big Data gathered by the

industrial partner both on the web and from its business partners, while

respecting the privacy of the candidates and making sure that the internal

recruitment strategies of companies are not revealed.

EcoRessources:

Terminological Resources for the Environment

2014-2016, in collaboration with the Observatoire de linguistique Sens-Texte, OLST

Lead: Marie-Claude

L’Homme

EcoRessources is a comprehensive platform that brings together online

dictionaries, glossaries and thesauri focusing on the environment. Subjects

covered include climate change, sustainable development, renewable energy,

threatened species, and the agri-food industry.

A single query allows users

to quickly identify all the resources that contain a specific term and to

directly access those that can provide additional information. Languages

covered are English, French and Spanish. Try it!

Implementation: Custom-made web site built on the Silex micro-framework, Bootstrap and other libraries. The index is

built offline from a collection of databases provided by our collaborators in

various formats, then converted to XML. XSLT is used to perform the conversions

and the queries.

defacto: A Collaborative Platform for the

Acquisition of Knowledge in French

2014-2016,

in collaboration with personnel from DIRO and OLST

Lead:

Philippe Langlais

Humans can understand each other because

they share a code,

i.e. language, as well as common

knowledge. Machines do not possess this collection of facts, a lack

that significantly hinders their capacity to process and understand natural

language (like French). Various projects have been trying to fill this gap,

like the Common Sense Computing Initiative (MIT) or NELL (CMU), typically for the English language. The

defacto project

aims to create a French platform to acquire common-sense knowledge. The

main originality of this project will be the implementation of an interactive

tutoring environment where the user will help the computer interpret French

text.

Implementation: I wrote a preliminary

study (in French)

on the collaborative creation of linguistic resources through so-called

“serious games”. With Philippe Langlais, I modified ReVerb to parse French and extracted simple facts from

French Wikipedia. Top-level concepts were extracted from this data and put in

relation with instances in the corpus. To properly visualize the results, this

knowledge was plotted on an interactive graph created with Gephi and Sigmajs Exporter (with modifications).

See a screenshot of the preliminary results here.

We devised an entity

classifier based on the relations these entities are involved in. For this, we

extracted millions of facts from the Érudit corpus and from French Wikipedia.

Our results have been published at the 2016 Canadian Artificial

Intelligence conference.

Suspicious Activity

Reporting Intelligent User Interface

2014-2015,

in collaboration with Pegasus Research & Technologies

Lead:

Guy Lapalme

Suspicious Activity

Reporting refers

to the process by which members of the law enforcement and public safety

communities as well as members of the general population communicate

potentially suspicious or unlawful incidents to the appropriate authorities.

SAR has been identified as one part of a broader Information Sharing

Environment (ISE). The ISE initiative builds upon the foundational work by the

US Departments of Justice and Homeland Security that have collaborated to

create the National Information Exchange Model (NIEM).

The approach of the current

project is to introduce artificial intelligence technologies: 1) to enhance

human-machine interactions, so as to get information into the system more rapidly and

to make it more readily available to users; and 2) to enable advanced

machine processing, so that data validation, fusion and inference can be performed on

data collected from multiple sources.

Implementation: Custom-made Java library using software from Apache Xerces™ Project to

parse IEPD specifications (XML Schema) in order to guide the creation of an

intelligent user interface. The IEPDs used are based on NIEM.
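The general idea of letting an XML Schema drive a user interface can be sketched as follows. This is not the XSDGuide code, which is a Java library built on Xerces: the sketch uses Python's standard `xml.etree.ElementTree` instead, and the tiny schema is invented (it is not a NIEM IEPD). The function name `form_fields` is hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace prefix used by ElementTree for XML Schema elements.
XS = "{http://www.w3.org/2001/XMLSchema}"

# Invented schema for illustration only; real IEPDs are far richer.
SCHEMA = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
  <xs:element name="Report">
    <xs:complexType>
      <xs:sequence>
        <xs:element name="Location" type="xs:string"/>
        <xs:element name="ObservedOn" type="xs:date"/>
        <xs:element name="Notes" type="xs:string" minOccurs="0"/>
      </xs:sequence>
    </xs:complexType>
  </xs:element>
</xs:schema>"""


def form_fields(schema_text):
    """List the typed elements of a schema as descriptions of form fields."""
    root = ET.fromstring(schema_text)
    fields = []
    for element in root.iter(XS + "element"):
        xsd_type = element.get("type")
        if xsd_type is None:
            continue  # container element declared with an inline complex type
        fields.append({
            "name": element.get("name"),
            "type": xsd_type,
            # In XML Schema, minOccurs defaults to 1, i.e. the element is required.
            "required": element.get("minOccurs", "1") != "0",
        })
    return fields


for field in form_fields(SCHEMA):
    print(field)
```

A user interface can then choose a widget per field type (for instance a date picker for `xs:date`) and flag required fields, without hard-coding the form.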

This video shows the XSDGuide prototype we created.

An article published in Balisage

2015 describes the prototype.

Zodiac: Automated insertion of diacritics in French [2013-2014]

Lead:

Guy Lapalme

Zodiac is a

system that will automatically restore accents (diacritical marks) in a French

text. Even though they are necessary in French to clearly convey meaning

(“interne” vs. “interné”) and to spell words correctly, diacritics are quite

frequently omitted in various situations. It may therefore be useful to

reinsert diacritical marks in a French text where these marks are absent. To this end, we

coded Zodiac, a statistical natural language processing tool providing this feature. It is

based on a statistical language model and on a large French lexicon.

Implementation: In C++, with special care to ensure portability across Mac, Windows and

Linux platforms. We used the ICU library to process Unicode text. The language

model used is a trigram model trained with the SRILM library. The training corpus consists of 1M sentences

pertaining to the political and news fields. The model is compiled in binary

form with the C++ library KenLM in order to be loaded in memory in

0.1s. I also wrote a Microsoft Word add-in and a Mac service for Zodiac.
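The principle behind this kind of tool can be sketched in a few lines of Python. This is a deliberately simplified illustration, not Zodiac's C++ implementation: it swaps the trigram model for raw bigram counts, decodes greedily from left to right, and learns its lexicon from a three-sentence corpus invented for the example.

```python
import unicodedata
from collections import Counter, defaultdict


def strip_accents(word):
    """Remove diacritics: decompose characters, then drop combining marks."""
    decomposed = unicodedata.normalize("NFD", word)
    return "".join(c for c in decomposed if not unicodedata.combining(c))


def train(sentences):
    """Build a lexicon (unaccented form -> accented variants) and n-gram counts."""
    lexicon, unigrams, bigrams = defaultdict(set), Counter(), Counter()
    for sentence in sentences:
        words = ["<s>"] + unicodedata.normalize("NFC", sentence.lower()).split()
        for prev, word in zip(words, words[1:]):
            lexicon[strip_accents(word)].add(word)
            unigrams[word] += 1
            bigrams[(prev, word)] += 1
    return lexicon, unigrams, bigrams


def restore(text, lexicon, unigrams, bigrams):
    """Greedily pick, for each word, the variant best supported by the counts."""
    prev, output = "<s>", []
    for word in text.lower().split():
        candidates = lexicon.get(strip_accents(word), {word})
        # Prefer the variant seen most often after the previous word,
        # then fall back on its overall frequency.
        best = max(candidates, key=lambda c: (bigrams[(prev, c)], unigrams[c]))
        output.append(best)
        prev = best
    return " ".join(output)


# Tiny training corpus, invented for this illustration.
CORPUS = [
    "il a été interné hier",
    "le médecin interne a parlé",
    "elle a été élue",
]
lexicon, unigrams, bigrams = train(CORPUS)
print(restore("il a ete interne hier", lexicon, unigrams, bigrams))
print(restore("le medecin interne a parle", lexicon, unigrams, bigrams))
```

The two test sentences show why context matters: the same unaccented form “interne” is restored as “interné” after “été” but left as “interne” after “médecin”.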

Cooperative approaches in

linguistic resource creation [2013]

RALI studied the creation

of linguistic resources using cooperative approaches (games with a purpose, microsourcing, etc.) at the

behest of the FAS. A report explaining the various possible strategies was

written and is available on RALI’s website (in French).

Automatic tweet

translation [2013]

In collaboration with NLP Technologies, we benefited from an Engage grant from NSERC to study tweet

translation. We focused on their collection and their particularities, in order

to create an automated translation engine dedicated to tweets emanating from

Canadian governmental agencies. An article published at LASM 2013 explains the project and the results

it yielded.

A web service published on

our servers allowed our partners to closely follow the development of the prototype.

A web interface (pictured below) offered a concrete demonstration platform of

the prototype.

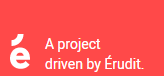

Interactive annotation in

judicial documents [2012]

An Engage grant awarded by NSERC gave us the

opportunity to collaborate with KeaText inc., a Montreal-based knowledge engineering

firm. The project consisted in using the UIMA framework to create and interact

with typed, semantic annotations in judicial corpora, for information

extraction.

We wrote a Web application

(see below) facilitating manual annotation, as well as a UIMA-based backend for

storing and manipulating these annotations. These modules used UIMA in an

innovative way and contributed to the adoption of these technologies by our

commercial partner.

Canadian sms4science

Project [2011-2012]

This research project will

help researchers better understand the language used in text messages and how

it helps build social networks. I worked on an automatic translator designed to translate SMS messages

into proper French.

The project is described here and the results of our work

are available here.

Multi-format Environmental Information Dissemination [2009-2012]

This Mitacs seed project explores new ways of customizing and translating the mass of daily information produced by Environment Canada. The project is explained here.

My efforts are focused on

the statistical machine translation of weather alerts (English to French and

French to English). A prototype of the system we are working on is available online.

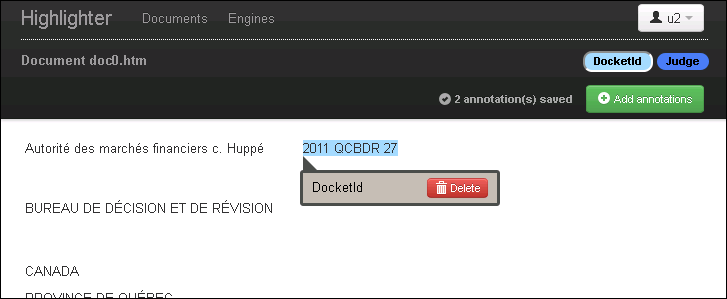

TransSearch 3 [2007-2010]

We are in the process of

overhauling the engine of TransSearch, our bilingual concordancer

used by professional writers to consult large databases of past translations.

This project is led in collaboration with our commercial partner, Terminotix Inc., which hosts the service.

We will offer TransSearch

users a new way to consult previous translations: instead of answering their

queries simply by presenting them with pairs of sentences (one in the source

language, the other in the target language), we want to highlight within these

pairs the source and target words they are looking for. This entails the

computation of word alignment between the source and target language

material.
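Once a word alignment is available, the highlighting itself is straightforward, as the following sketch suggests. The alignment here is written by hand as a list of (source position, target position) pairs; in a real system it would come from a statistical alignment model, which is the hard part and is not shown. The function names are hypothetical and this is not TransSearch's code.

```python
def mark(words, hits):
    """Wrap the words whose positions are in hits with square brackets."""
    return " ".join(f"[{w}]" if k in hits else w for k, w in enumerate(words))


def highlight(source, target, alignment, query):
    """Mark the query words in the source and their aligned target words."""
    src, tgt = source.split(), target.split()
    query_words = set(query.lower().split())
    # Positions of the query words in the source sentence.
    src_hits = {i for i, w in enumerate(src) if w.lower() in query_words}
    # Target positions linked to those source positions by the alignment.
    tgt_hits = {j for i, j in alignment if i in src_hits}
    return mark(src, src_hits), mark(tgt, tgt_hits)


# Hand-written alignment for "the red house" / "la maison rouge".
ALIGNMENT = [(0, 0), (1, 2), (2, 1)]
print(highlight("the red house", "la maison rouge", ALIGNMENT, "red"))
# prints ('the [red] house', 'la maison [rouge]')
```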

Collaboration with Druide

Informatique Inc. [2009]

We have worked with

Montreal-based Druide Informatique Inc. to use artificial intelligence

techniques to improve the precision of their popular French grammar checker

Antidote. Our efforts were successful and integrated into the Antidote HD

product. This is the topic of my master’s thesis.

Document Understanding

Conference (DUC) [2007]

In collaboration with the

Université de Genève (LATL), we developed a topic-answering and

summarizing system for the main task of DUC 2007. We chose to use an all-symbolic approach,

based on FIPS, a multilingual syntactic parser. We used XML and XSLT to

represent and manipulate FIPS’s parse trees.

ASLI: Intelligent system

for automatic synthesis and summarization of legal information [2007-2008]

For a project funded by PRECARN, the RALI, NLP Technologies and Mrs. Diane Doray are developing a technology for the automated analysis of

legal information in order to facilitate information retrieval in databases of

judgments published by legal information providers. The system includes a

machine translation algorithm for the French/English summaries and judgments.

Portail d’interrogation des corpus lexicaux québécois [2007]

The RALI helped create a new query engine for Quebec’s lexical corpora available on the website of the Secrétariat à la politique linguistique du Québec.

IdeoVoice II [2007]

The RALI is working with Oralys Inc. to augment their IdeoVoice product with a

speech-to-ideogram translation module to assist communication with

hearing-impaired, dysphasic and autistic persons. Ultimately, it is our hope

that this technology will bridge the language barrier using idea transference

rather than word-for-word translation.

3GTM (MT3G): A

3rd-Generation Translation Memory [2005]

The Montreal-based company Lingua Technologies Inc., in partnership with the RALI and Transetix Global Solutions Inc. in Ottawa, started the R&D

project 3GTM through funding by the Alliance Precarn-CRIM program at the

beginning of 2005. The project aims at the development and the marketing of new

computer-assisted translation software based on third-generation translation

memories. This tool that we are helping to develop will be able to recycle

previous translations intelligently.

My colleagues and I within

the RALI are working to find solutions to the numerous scientific challenges of

the project: statistical model creation, sub-sentential unit retrieval,

integration of the translation context, etc. A French presentation of the

project made for a seminar at the RALI is available here.

CESTA evaluation campaign

[2005]

To test the many language

tools and technologies at our disposal, and to improve them, the RALI also

participates in the CESTA evaluation campaign. It proposes a series of

evaluation campaigns of machine translation systems for different languages

(the target language is French). This project, started in January 2003, is

funded by the French Ministry of Research (Ministère de la Recherche français) under the Technolangue program.

Older

The exploration of the vast

and mysterious world of natural language processing also led me to research

word alignment technologies and the statistical models that underlie them.

Word-aligning Inuktitut and English proved particularly enlightening. Moreover,

we are currently interested in exploiting new translation subsentential units

called treelets, introduced by Quirk et al.